Foreign Accent and Forensic Speaker Identification in Voice Lineups: The Influence of Acoustic Features Based on Prosody

Summary

This research aimed to compare L1-L2 English and L1-L2 Portuguese to determine how much a foreign accent affects both rhythm metrics and prosodic-acoustic parameters, as well as the choice of the target voice in a voice lineup.

Abstract

This research aims to examine both the prosodic-acoustic features and the perceptual correlates of foreign-accented English and foreign-accented Brazilian Portuguese and to check how the speakers' productions of foreign and native accents correlate with the listeners' perception. In the Methodology, we conducted a speech production procedure with a group of American speakers of L2 Brazilian Portuguese and a group of Brazilian speakers of L2 English, and a speech perception procedure in which we performed voice lineups for both languages. For the speech production statistical analysis, we ran Generalized Additive Models to evaluate the effect of the language groups on each class (metric or prosodic-acoustic) of features controlled for the smoothing effect of the covariate(s) of the opposite class. For the speech perception statistical analysis, we ran a Kruskal-Wallis test and a post-hoc Dunn's test to evaluate the effect of the voices of the lineups on the scores judged by the listeners. We also conducted acoustic (voice) similarity tests based on Cosine and Euclidean distances. Results showed significant acoustic differences between the language groups in terms of variability of the f0, duration, and voice quality. For the lineups, the results indicated that prosodic features of f0, intensity, and voice quality correlated with the listeners' perceived judgments.

Introduction

The accent is a salient and dynamic aspect of communication and fluency, both in the native language (L1) and in a foreign language (L2)1. Foreign accent represents the L2 phonetic features of a target language, and it can change over time in response to the speaker's L2 experience, speaking style, input quality, and exposure, among other variables. A foreign accent can be quantified as a (scalar) degree of difference between L2 speech produced by a foreign speaker and a local or reference accent of the target language2,3,4,5.

This research aims to examine both the prosodic-acoustic features and the perceptual correlates of foreign-accented English and foreign-accented Brazilian Portuguese (BP), as well as to check to what extent the speakers' productions of foreign and native accents are correlated with the listeners' perception. Prior research in the forensic field has demonstrated the robustness of vowels and consonants in foreign accent identification as either being stable for a long-term analysis of an individual (The Lo Case6) or referring to the high ID accuracy of a speaker (The Lindbergh Case7). However, the exploration of prosodic-acoustic features based on duration, fundamental frequency (f0, i.e., the acoustic correlate of pitch), intensity, and voice quality (VQ) has gained increasing attention8,9. Thus, the choice for prosodic-acoustic features in this study represents a promising avenue in the forensic phonetics field8,10,11,12,13.

The present research is supported by studies dedicated to foreign accents as a form of voice disguise in forensic cases14,15, as well as in the preparation of voice lineups for speaker recognition16,17. For instance, speech rate played an important role in the identification of German, Italian, Japanese, and Brazilian speakers of English18,19,20,21,22. Besides speech rate, long-term spectral and f0 features challenge L2 proficient speakers with familiarity with the target language because the brain and cognitive bases suffer a deficit in memory, attention, and emotion, reflecting in the speaker's phonetic performance during long speech turns23. The view of foreign accents in the forensic field is that the definition of what really sounds like a foreign accent depends much on the listener's unfamiliarity rather than having a none-to-extreme degree of foreign-accented speech24.

In the perceptual domain, a common forensic tool used since the mid-1990s for recognizing criminals is the Voice Lineup (auditory recognition), which is analogous to the visual recognition used to identify a perpetrator at a crime scene16,25. In a voice lineup, the suspect's voice is presented alongside foils (voices similar in sociolinguistic aspects such as age, sex, geographical location, dialect, and cultural status) for identification by an earwitness. The success or failure of a voice lineup will depend on the number of voice samples and the sample durations25,26. Furthermore, for real-world samples, it is considered that the audio quality consistently impacts the accuracy of voice recognition. Poor audio quality can distort the unique characteristics of a voice27. In the case of voice similarity, fine phonetic detail based on f0 can confuse the listener during voice recognition28,29. Such acoustic features extend beyond f0 and encompass elements of duration, and spectral features of intensity and VQ30. This view of multiple prosodic features is crucial in the context of forensic voice comparison to ensure accurate speaker identification9,14,15,29,31.

In summary, studies in forensic phonetics have shown some variation regarding foreign accent identification over the last decades. On the one hand, a foreign accent does not seem to affect the process of identifying a speaker32,33 (especially if the speaker is unfamiliar with the target foreign accent34). On the other hand, there are findings in the opposite direction12,34,35.

Protocol

This work received approval from a human research ethics committee. Furthermore, informed consent was obtained from all participants involved in this study to use and publish their data.

1. Speech production

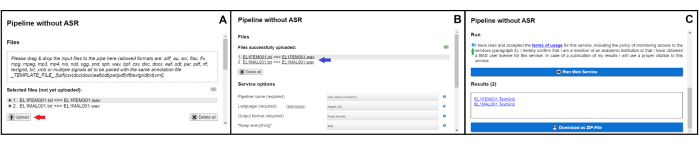

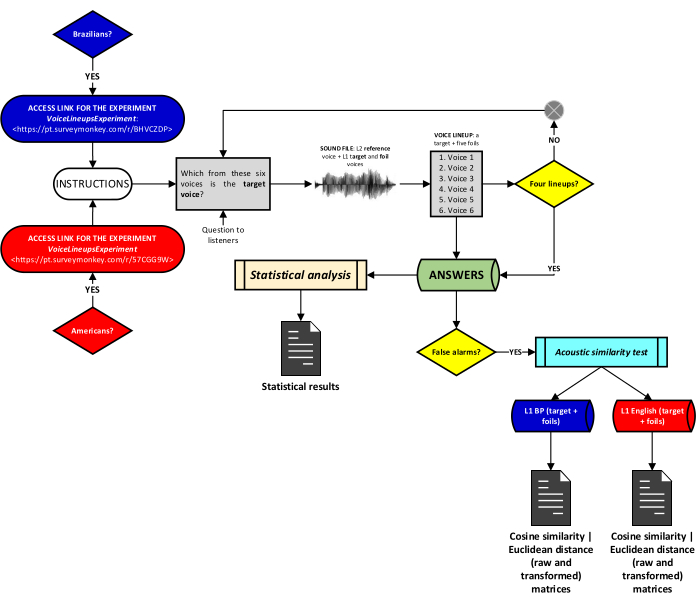

NOTE: We collected speech from a reading task on both 'L1 English-L2 BP' produced by Group 1: The American English (from the U.S.A.) Speakers (AmE-S), and on both 'L1 BP-L2 English' produced by Group 2: The Brazilian Speakers (Bra-S). See Figure 1 for a flowchart for speech production.

Figure 1: Schematic flowchart for speech production.

- Participants

- Determine the number of participants, the language (L1 English, L2 BP; L1 BP, L2 English), the sex (female or male), the age (mean, standard deviation), the group characteristics (professionals or undergraduate students), and the L2 proficiency level (advanced or well-advanced) for each group.

NOTE: For the present research, both AmE-S and Bra-S were considered L2 proficient (both groups were qualified as B2-C136). The AmE-S had lived for two years in Brazil when the procedures were conducted. The Bra-S had lived more than two years in the U.S.A when the procedures were conducted. While living abroad, both groups used to speak their L2 for studying and working purposes at least 6 days a week for ~4-5 h a day.

- Allocate the participants to a comfortable and quiet room and present the reading material for each group.

- Data collection

NOTE: Speech data must be collected from a reading task in the following languages: L1-English, and L2-BP; L1-BP, and L2-English. Let the participants read the texts beforehand if necessary.

- Recording procedures

- Record the speech data in a quiet place with appropriate acoustic conditions.

- Use a digital voice recorder (see the Table of Materials)37 and a unidirectional electromagnetic-isolated cardioid microphone (see the Table of Materials)38.

- Record the audio data in '.wav' format.

- Set the sampling rate to 48 kHz and the quantization to 16 bits.

NOTE: The audio format and the configuration for the sampling and quantization rates described in steps 1.2.1.3 and 1.2.1.4 are applied to ensure high quality and noise reduction to preserve the spectral features used for later acoustic analysis.
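The recording settings above can be verified automatically before any analysis. The following is a minimal sketch, assuming uncompressed PCM '.wav' files and using only Python's standard `wave` module; the function name `check_wav_format` is hypothetical and not part of the protocol's scripts.

```python
import wave


def check_wav_format(path, expected_rate=48000, expected_bits=16):
    """Return (ok, sample_rate_hz, bit_depth) for a PCM '.wav' file.

    The defaults mirror the 48 kHz / 16-bit settings of the recording steps.
    """
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        bits = wav.getsampwidth() * 8  # sample width is reported in bytes
    ok = rate == expected_rate and bits == expected_bits
    return ok, rate, bits
```

A recording that fails the check could be re-exported or re-recorded before the forced alignment.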

- Acoustic analysis

NOTE: Divide acoustic analysis procedures into three steps: forced-alignment, realignment, and acoustic feature extraction.

- Write the linguistic transcription (in a '.txt' file) for each audio file.

- Tag the pair of '.txt'/'.wav' files with the same name (e.g., 'my_file.wav'/'my_file.txt').

NOTE: To enhance the performance of the procedure outlined in section 1.3.7, it is highly recommended that the initial three characters of the '.txt/.wav' file tags represent the Language, Dialect, or Accent, while the fourth to sixth characters denote the Sex (e.g., EL1FEM for English L1 Female). From the seventh character onward, the user should indicate the speaker number (e.g., 001 for the first speaker). Consequently, the first '.txt/.wav' pair is EL1FEM001.

- Create a folder for each L1-L2 language.

NOTE: A folder for L1-L2 English and a folder for L1-L2 BP.

- Certify that all file pairs of the same language are in the same folder.
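The naming convention and the pairing requirement described above can be checked with a short helper. This is only a sketch under the stated convention (three characters for Language/Dialect/Accent, three for Sex, then the speaker number); the names `parse_tag` and `unpaired_files` are hypothetical.

```python
import os
import re

# Characters 1-3: Language/Dialect/Accent; 4-6: Sex; 7 onward: speaker number.
TAG_PATTERN = re.compile(
    r"^(?P<language>[A-Za-z0-9]{3})(?P<sex>[A-Za-z]{3})(?P<speaker>\d+)$"
)


def parse_tag(stem):
    """Split a file tag such as 'EL1FEM001' into its three fields."""
    match = TAG_PATTERN.match(stem)
    if match is None:
        raise ValueError(f"file tag does not follow the convention: {stem!r}")
    return match.groupdict()


def unpaired_files(filenames):
    """Return the tags that lack either the '.wav' or the '.txt' partner."""
    wavs = {os.path.splitext(f)[0] for f in filenames if f.lower().endswith(".wav")}
    txts = {os.path.splitext(f)[0] for f in filenames if f.lower().endswith(".txt")}
    return sorted(wavs ^ txts)  # symmetric difference: present in only one set
```

For example, passing `os.listdir(folder)` to `unpaired_files` lists any recording that still lacks a transcription (or vice versa) before the files are uploaded to the aligner.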

- Conduct the forced alignment.

- Access the web interface of Munich Automatic Segmentation (MAUS) forced aligner (webMAUS)39 at https://clarin.phonetik.uni-muenchen.de/BASWebServices/interface/Pipeline.

- Drag and drop each pair of .wav / .txt files from the folder to the dashed rectangle in Files (or click inside the rectangle, Figure 2A).

- Click the Upload button to upload the files into the aligner (see red arrow in Figure 2A).

- Select the following options in the Service options menu (Figure 2B): G2P-MAUS-PHO2SYL for Pipeline name; English (US) (for Language) if L1-L2 English data; Italian (IT) (for Language) if L1-L2 BP data.

NOTE: We chose 'Italian' for the BP data because webMAUS does not provide pretrained acoustic models for BP forced alignment. The phonetic literature suggests that Italian phonology has a somewhat comparable symmetric seven-vowel inventory, just like BP40, as well as consonantal acoustic similarities41,42.

- Keep the default options for 'Output format' and 'Keep everything'.

- Check the Run option box for accepting the terms of usage (see green arrow in Figure 2C).

- Click the Run Web Service button to run the uploaded files in the aligner.

NOTE: For each audio file, MAUS forced aligner returns a Praat TextGrid object (a Praat pre-formatted '.txt' file containing the annotation of words, phonological syllables, and phones based on the linguistic transcription extracted from the '.txt' file described in step 1.3.1).

- Click the Download as ZIP-File button to download the TextGrid files as a zipped file (Figure 2C).

NOTE: Make sure that the zipped TextGrid files are downloaded in the same folder as the audio files.

- Extract the TextGrid files for later realignment in the phonetic analysis software43.
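The extraction of the downloaded archive can also be scripted. The sketch below assumes that the downloaded ZIP file contains members ending in '.TextGrid'; the function name `extract_textgrids` is hypothetical, and only Python's standard library is used.

```python
import zipfile
from pathlib import Path


def extract_textgrids(zip_path, target_dir):
    """Extract only the '.TextGrid' members of a ZIP archive into target_dir.

    Returns the sorted list of extracted member names.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    extracted = []
    with zipfile.ZipFile(zip_path) as archive:
        for member in archive.namelist():
            if member.lower().endswith(".textgrid"):
                archive.extract(member, target)
                extracted.append(member)
    return sorted(extracted)
```

Pointing `target_dir` at the folder that holds the audio files keeps each '.wav' next to its TextGrid, as the realignment step requires.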

- Conduct the realignment.

- Access and download the script for Praat VVUnitAligner44 from https://github.com/leonidasjr/VVunitAlignerCode_webMAUS/blob/main/VVunitAligner.praat.

- Certify that all file pairs of the same language and the VVUnitAligner script are in the same folder.

NOTE: A folder for the L1-L2 English files and the VVunitAligner, and a folder for the L1-L2 BP files and the VVunitAligner.

- Open the phonetic analysis software.

- Click Praat | Open Praat script… to call the script from the object window.

- Click the Run button once.

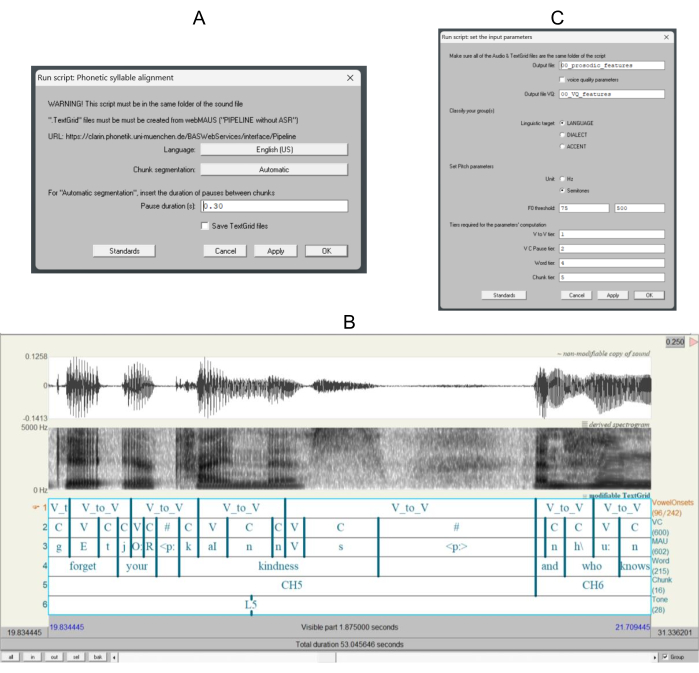

NOTE: A form called Phonetic syllable alignment containing the settings for using the script will pop up on the screen (Figure 3A).

- Click the Language button to choose from 'English (US),' 'Portuguese (BR),' 'French (FR),' or 'Spanish (ES)' languages.

- Click the Chunk segmentation button to choose from 'Automatic,' 'Forced (manual),' or 'None' segmentation procedure.

- Check the Save TextGrid files option to automatically save the new TextGrid files.

- Click the Ok | Run buttons for a realignment of the phonetic units from step 1.3.5.7.

NOTE: For each audio file, VVUnitAligner will generate a new TextGrid file for section 1.3.7 (Figure 3B).

- Conduct the automatic extraction of the acoustic features.

- Access and download the script SpeechRhythmExtractor45 from https://github.com/leonidasjr/SpeechRhythmCode/blob/main/SpeechRhythmExtractor.praat for automatic extraction of the prosodic-acoustic features.

- Create a new folder and put SpeechRhythmExtractor along with all pairs of audio/TextGrid files of all languages.

- Open the phonetic analysis software.

- Click Praat | Open Praat script… to call the script from the object window.

- Click the Run button only once.

NOTE: A form containing the script settings will pop up on the screen. In the boxes of the output '.txt' file names, rename the files accordingly or leave the default names.

- Check the voice quality parameters option to save the Output file VQ for voice quality (Figure 3C).

NOTE: This second output file (the Output file VQ) contains the parameters of the difference between the 1st and the 2nd harmonics (H1-H2) and the Cepstral Peak Prominence (CPP)9.

- Check the Linguistic target option to choose from the labels 'Language,' 'Dialect,' or 'Accent' (Figure 3C).

- Check the Unit option to choose the f0 features in Hz or in Semitones (Figure 3C).

- Set the minimum and maximum f0 thresholds in the F0 threshold fields (Figure 3C).

NOTE: Unless the research has specific purposes or pre-set specific audio features, it is strongly recommended to leave the parameters of step 1.3.7.9 with the default values.

- Click Ok | Run for the automatic extraction of the acoustic features.

NOTE: The script SpeechRhythmExtractor returns a tab-delimited '.txt' file (Output file/ Output file VQ) containing the acoustic features extracted from the speakers.
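Because the output is a tab-delimited text file, it can be inspected programmatically before it is loaded into the statistical environment. The following is a minimal sketch, assuming that the first row holds the column names and that the file is UTF-8 encoded (both are assumptions, not documented properties of the script's output); the function name `load_feature_table` is hypothetical.

```python
import csv


def load_feature_table(path, encoding="utf-8"):
    """Read a tab-delimited '.txt' file into a list of row dictionaries.

    All values are returned as strings; convert the numeric columns as needed.
    """
    with open(path, newline="", encoding=encoding) as handle:
        return list(csv.DictReader(handle, delimiter="\t"))
```

Each returned row maps a column name to its value for one audio file, which makes it easy to confirm that every speaker has a complete set of features.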

- Statistical analysis

- Upload the spreadsheet containing the acoustic features into the R46 environment (or any statistical software/environment of choice).

- Perform Generalized Additive Models (GAMs) non-parametric statistics.

- Perform GAMs in R.

- Type the following commands and press Enter.

library(mgcv)

# Template only: replace the placeholder names with the research variables.
# Language must be a factor for the 'by' smooth.
model <- gam(response_feature ~ Language + s(metric_feature, by = Language) + other_feature, data = data_frame)

NOTE: We decided to perform the test statistics of the protocol in R programming language because of its increasing popularity among phoneticians (and linguists) in the academic community. R has been largely used in phonetic fieldwork research47. Keep in mind that step 1.4.2.2 contains a pseudo-code. Write the code according to the research variables.

Figure 2: Screenshot from phonetic alignment using MAUS forced aligner. (A) The dashed rectangle is meant for dragging and dropping 'my_file.wav'/'my_file.txt' files or clicking inside for searching such files from the folder; the upload button is indicated by the red arrow. (B) The uploaded files from panel A (see blue arrow), the pipeline to be used, the language for the pairs of files, the file format to be returned, and a 'true/false' button for keeping all files. (C) The checkbox Terms of Usage (see green arrow), the Run Web Services button, and the Results (TextGrid files to be downloaded).

Figure 3: Screenshot of the realignment procedure. (A) Input settings form for the realignment procedure. (B) Partial waveform, broadband spectrogram with f0 (blue) contour, and six tiers segmented (and labeled) as tier 1: units of vowel onset to the next vowel onset (V_to_V); tier 2: units of vowel (V), consonant (C), and pause (#); tier 3: phonic representations V_to_V; tier 4: some words from the text; tier 5: some chunks (CH) of speech from the text; tier 6: tonal tier containing the highest (H), and the lowest (L) tone of each speech chunk produced by a female AmE-S. (C) Input settings for the automatic extraction of the acoustic features.

2. Speech perception

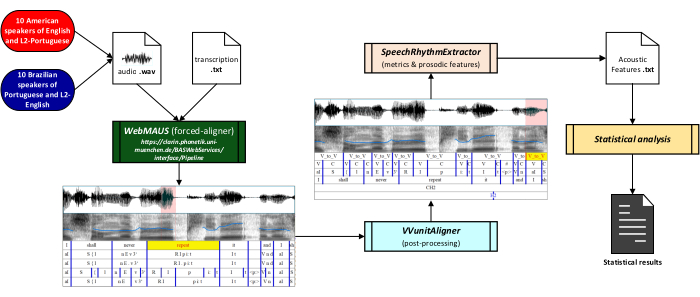

NOTE: We carried out four voice lineups in English with American listeners and four lineups in BP with Brazilian listeners. See Figure 4 for a flowchart for speech perception.

Figure 4: Schematic flowchart for speech perception.

- Participants

- Choose different participants for each group from the ones who participated in the speech production protocol.

NOTE: Two groups of participants were selected for this part of the protocol: Group 1: The American English (from the U.S.A.) Listeners (AmE-L), and Group 2: The Brazilian Listeners (Bra-L). For the present research, both AmE-L and Bra-L were considered L2 proficient (both groups were qualified as B2-C136). The AmE-L had lived two years in Brazil when the procedures were conducted. The Bra-L had lived more than two years in the U.S.A when the procedures were conducted. While living abroad, both groups used to speak their L2 for studying and working purposes (at least six days a week for about 4 to 5 hours a day).

- Determine the number of participants, the language (L1 English, L2 BP; L1 BP, L2 English), the sex (female or male), the age (mean, standard deviation), the group characteristics (professionals or undergraduate students) and the L2 proficiency level for each group.

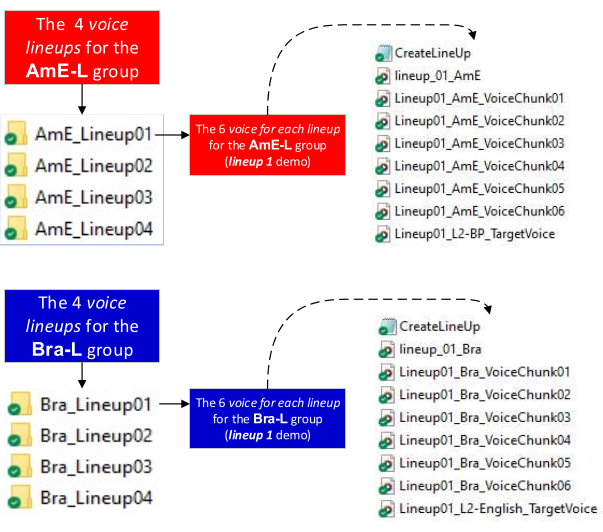

- The voice lineups

NOTE: Divide the voice lineups' procedures into two different steps: preparing and running the voice lineups.

- Prepare four voice lineups for English and four for BP.

- Get audio files from the speakers of section 1: Speech production.

- Certify that the audio files of each language factor are in separate folders.

- Randomly choose six voice chunks in L1 English or L1 BP.

NOTE: Six voices in the lineup represent one target voice and five foils.

- Choose a voice chunk in L2 English or L2 BP from one of the speakers included in step 2.2.1.3.

NOTE: The voice chunk in step 2.2.1.4 is the reference voice. Chunks must be approximately 20 s long8.

- Access and download the script for Praat CreateLineup48 from https://github.com/pabarbosa/prosody-scripts-CreateLineUp.

- Certify that the L2 reference voice, the L1 foils, and the L1 target voice are in the same folder before running the CreateLineup script (Figure 5).

- Open the phonetic analysis software.

- From the object window, click Praat | Open Praat script… to call the script.

- Click Run | Run.

NOTE: The script returns a file in the following order: (the L2 reference voice) + (the L1 target voice and the foils randomly distributed) (Figure 5).
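The presentation order described in the NOTE above (the L2 reference voice first, followed by the L1 target voice and the foils in random order) can be illustrated as follows. This sketch is not the CreateLineup script; it only reproduces the ordering logic on file names, and the function name `build_lineup_order` and the optional `seed` parameter are hypothetical.

```python
import random


def build_lineup_order(reference, target, foils, seed=None):
    """Return the playback order: reference first, then target and foils shuffled."""
    rng = random.Random(seed)  # a fixed seed makes the order reproducible
    candidates = [target] + list(foils)
    rng.shuffle(candidates)
    return [reference] + candidates
```

Recording the seed used for each lineup allows the position of the target voice to be recovered later when the listeners' judgments are scored.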

- Running the voice lineups

- Create an online space to host the lineups on any platform of choice (e.g., SurveyMonkey; see the Table of Materials) for remotely conducting the voice lineups.

- Access the online space link.

- Upload the files returned from the CreateLineup script to the platform.

- Run the procedure before the participants do, to test every step.

NOTE: It is recommended to previously access the link and run the lineups to check if everything is working out normally.

- Statistical analysis

- Upload the spreadsheet containing the scores of the listeners' judgments into the R environment (or any statistical software/environment of choice).

- Perform the Kruskal-Wallis test in R.

- Type the following commands and press Enter.

# Template only: replace the placeholder names with the research variables.
model <- kruskal.test(judgments ~ voice_lineup, data = data_frame)

- Perform a post-hoc Dunn's test.

- Perform Dunn's test in R.

- Type the following commands and press Enter.

library(FSA)

# Template only: replace the placeholder names with the research variables.
model <- dunnTest(judgments ~ voice_lineup, data = data_frame, method = "bonferroni")

NOTE: The codes in steps 2.3.1.2 and 2.3.2.2 are pseudo-codes (see NOTE 1.4.2.2).
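To make explicit what the Kruskal-Wallis test computes, the H statistic can be written out by hand. The sketch below is for illustration only: it pools the scores, assigns average ranks to ties, and applies H = 12 / (N(N + 1)) × Σ(R_i² / n_i) − 3(N + 1). It omits the tie correction and the p-value that R's kruskal.test reports, so the two can differ when ties are present. The function names are hypothetical.

```python
def average_ranks(values):
    """Rank values from 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Kruskal-Wallis H statistic (without tie correction) for a list of groups."""
    pooled = [value for group in groups for value in group]
    n_total = len(pooled)
    ranks = average_ranks(pooled)
    weighted_sum = 0.0
    start = 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        weighted_sum += rank_sum ** 2 / len(group)
        start += len(group)
    return 12.0 / (n_total * (n_total + 1)) * weighted_sum - 3 * (n_total + 1)
```

Here each group would hold the listeners' scores for one voice of a lineup; a large H indicates that at least one voice received systematically different scores, which is what the post-hoc Dunn's test then localizes.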

- Acoustic similarity analysis

- Select the lineups (cf. NOTE in step 2.2.1.3) that presented non-significant differences between the target and any of the foils.

- Repeat procedures of steps 1.3.1 to 1.3.7.5, and steps 1.3.7.8 to 1.3.7.10.

- Access and download the script for Python49, AcousticSimilarity_cosine_euclidean50 from https://github.com/leonidasjr/AcousticSimilarity/blob/main/AcousticSmilarity_cosine_euclidean.py.

NOTE: The script returns three matrices (in '.txt' and '.csv'): one for Cosine similarity51,52, one for Euclidean distance52,53, and one for Transformed Euclidean distance values, as well as a pairwise comparison between the target voice and each foil.

- Certify that the script is downloaded in the same folder of the lineup dataset.

- Click the Open file… button to call the script.

- Click Run | Run Without Debugging buttons.

NOTE: The second Run button may be tagged as Run or Run Without Debugging or Run Script. They all execute the same commands. It simply depends on the Python environment used.

- Perform voice similarity tests based on acoustic features.

NOTE: Cosine similarity (the complement of cosine distance) is a technique applied in Artificial Intelligence (AI), particularly in machine learning in automatic speech recognition (ASR) systems. For feature vectors with non-negative values, it is a measure of similarity between zero and one. A cosine similarity close to one means that two voices are quite likely to be similar. A cosine similarity close to zero means that the voices are quite likely to be dissimilar52. The Euclidean distance, often referred to as Euclidean similarity, is also widely used in AI, machine learning, and ASR. It represents the straight-line distance between two points in Euclidean space53, i.e., the closer the points (smaller values of distance), the more similar the voices are. For a clearer understanding of the reported results of both techniques, we performed a transformation of the Euclidean distance raw scores into values from zero (less voice similarity) to one (more voice similarity)54.
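The two measures described in the NOTE above can be written in a few lines. The following sketch is not the AcousticSimilarity script; it only shows the standard definitions applied to two feature vectors, and it does not reproduce the zero-to-one transformation of the Euclidean distance, whose formula is not given here. The function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero feature vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))
```

In a lineup, `a` would hold the acoustic features of the target voice and `b` those of one foil. Cosine similarity compares only the direction of the two vectors, whereas the Euclidean distance also depends on the scale of each feature, which is one reason the two measures can rank foils differently.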

Figure 5: Directory setup for speech perception. Lineup folders. Each folder contains six L1 voices, the L2 target voice, the "CreateLineup" script, and the voice lineup audio file (returned after running the script).

Representative Results

Results for speech production

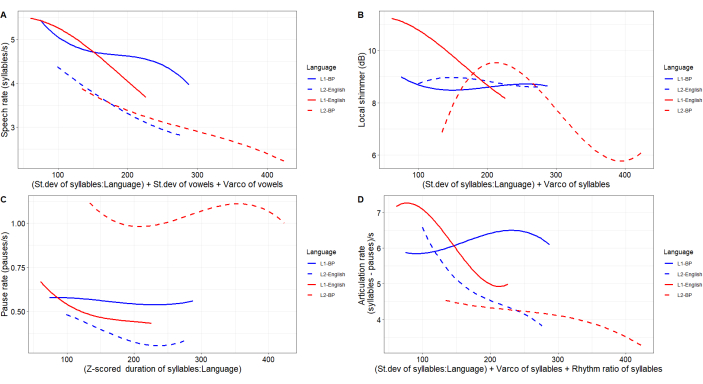

In this section, we describe the performance of the statistically significant prosodic-acoustic features and rhythm metrics. Such prosodic features were speech, articulation, and pause rates, which are related to duration, and shimmer, which is related to voice quality. The rhythm metrics were standard deviation (SD) of syllable duration, SD of consonant duration, SD of vocalic or consonantal duration, and the variation coefficient of syllable duration (see the Supplemental Table S1).
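As an illustration of the duration-based rhythm metrics named above, the SD and the variation coefficient of a set of syllable durations can be computed as follows. This is a sketch under two assumptions that may differ from SpeechRhythmExtractor: the sample SD (n − 1 denominator) is used, and the variation coefficient is expressed as 100 × SD / mean. The function name `duration_variability` is hypothetical.

```python
import statistics


def duration_variability(durations):
    """Return the mean, SD, and variation coefficient of unit durations.

    Assumes the sample SD and a variation coefficient of 100 * SD / mean.
    """
    mean = statistics.mean(durations)
    sd = statistics.stdev(durations)  # sample standard deviation (n - 1)
    return {"mean": mean, "sd": sd, "varco": 100.0 * sd / mean}
```

Because the variation coefficient divides the SD by the mean duration, it is less sensitive to overall tempo than the raw SD, which matters when comparing speakers whose speech rates differ.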

The Speech rate (Figure 6A) decreases more rapidly for L1-L2 English than for L1-L2 BP, although the rate is lower for both L2s. The Speech rate is related to three metrics: SD of syllable duration (SD-S), SD of vowels (SD-V), and the syllabic variation coefficient (Varco-S). It is also important to keep in mind that both the AmE-S and the Bra-S groups are proficient in their L2s. It seems that this rate is affected by the higher values of syllable duration and the lower variability produced by L1-L2 BP, causing less steep slopes.

The Articulation rate (Figure 6D) is related to SD-S, Varco-S, as well as the Syllabic Rhythm Ratio (RR-S); the latter represents the ratio between shorter and longer syllables. Such metrics seem to affect the Articulation rate just as they affect the Speech rate, owing to sentence length and variation in duration, which lead to higher L2 speech planning and L2 cognitive load9,23,54. A higher cognitive-linguistic load may be required in the sentence length domain in a way that prosodic-acoustic parameters are affected55.

Regarding the prosodic-acoustic features of Speech and Articulation rates, our findings suggest that the more a speaker varies the duration of syllables (and vowels), the lower such rates. We hypothesize that the speech perception of both L1 and L2 BP sounds somewhat slower than L1 and L2-English, and that L1-English sounds faster than all other language levels. This fact might be linked to speech rhythm and the hypothesis that while L1-L2 BP leans toward the syllable-timed pole of the rhythm continuum, L1-L2 English moves toward the stress-timed pole21,22.

The Local shimmer (Figure 6B) is influenced by SD-S and Varco-S. For the Local shimmer, both productions of Bra-S (L1-BP and L2-English) present a somewhat monotonic trajectory as variability of syllable duration increases (SD and Varco, see Supplemental Table S1). The perception of vocal effort might be apparent when listening to the productions of Bra-S due to a low variation that ranges between 8.5 and 9 dB. Bra-S speech is affected by vocal load. L1-BP is highly affected by sentence length, being less sensitive to high variations of syllable duration (BP rhythmic pattern shows less syllabic variation22,56).

The Pause rate (Figure 6C) shows that L2-English speakers produce longer pauses (from 200 ms to 375 ms) than the L1-English, L1-BP, and L2-BP ones (mean of 30 ms for L1-English, 50 ms for L1-BP, and 100 ms for L2-BP). Speech planning, in this case, might have affected L2-English production due to increased brain activity54,57. It is expected that higher language proficiency would result in a greater reading speed and span54; nevertheless, even for L2 proficient speakers, reading tasks presented more effort in higher-order cognitive aspects of foreign speech and in language processing54,57. Our findings show, at least on a preliminary basis, that L2-BP speakers may be less affected by pauses than L2-English due to differences in the rhythmic pattern of both languages.

Figure 6: Generalized Additive Models for the prosodic-acoustic features. On the Y-axis, the response variable (the acoustic features to be modeled); on the X-axis, the predictor variables: the factor 'Language' and the covariates in the additive models that will provide the shape and the trajectories of the curves (i.e., the smoothing effects: 'Language + covariates'), and the product of the 'Language' and a metric feature that will provide a more accurate projection of the response variable (i.e., factor-smooth interactions: 'covariate:Language'). Abbreviation: GAMs = Generalized Additive Models.

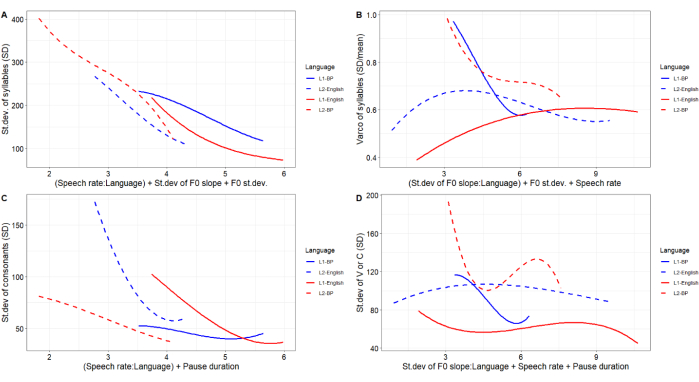

In relation to the rhythm metrics, for SD-S (Figure 7A), our findings reveal that the more a speaker varies the f0 curve and increases the speech rate, the more SD-S decreases for all the language levels (a mirror effect of Figure 6A). In the case of the SD for consonants (SD-C, Figure 7C), L1-BP, contrary to L1 English, shows low variation as Speech rate or Pause duration increases. Such SD direction is predicted since L1-English variability of syllable duration is (statistically) significantly higher than L1-BP44,58. The Varco-S (Figure 7B and Supplemental Table S1) seems somewhat correlated to the L1 and the L2 of the same language group. As the f0 variability and Speech rate increase, the Varco-S decreases for the L1-BP and seems to decrease and attenuate for L2-BP. For the English productions, while f0 variability and Speech rate increase, Varco-S increases and attenuates for L1-English and L2-English.

Regarding the SD for vowels or consonants (SD-V_C, Figure 7D and Supplemental Table S1), our results show some similarities with the Varco-S performance (Figure 7B). For instance, higher Speech rate, Pause duration, and f0 variability promote a fall-rise trajectory of the SD-V_C for L1-BP, and for the L2-BP. In English productions, while such prosodic features are higher, SD-V_C slightly decreases, attenuates for L1-English, slightly increases, and then attenuates for L2-English. The Varco-S and the SD-V_C correlation to the L1-L2 of the same language groups may be partially explained by the Lombard Effect, which refers to the alteration of acoustic parameters in speech due to background noise59.

In foreign speech, depending on the complexity of the task (e.g., read speech), speakers have used similar acoustic strategies on both L1 and L259,60. On the one hand, Marcoux and Ernestus found that f0 measures (range and median) in L2 Lombard speech suggest that L2 English speakers were influenced by their L1 Dutch61,62. On the other hand, more recent findings propose that although L2 Lombard speech is expected to differ from L1 Lombard speech due to the higher cognitive load when speaking the L2, L2 English speakers produced Lombard speech in the same direction as the L1 English speakers63. The extent to which our findings are aligned with Marcoux and Ernestus63 and diverge from Waaning59, Villegas et al.60, and Marcoux and Ernestus61,62 needs further investigation.

Figure 7: Generalized Additive Models for the metric features. On the Y-axis, the response variable (the metric features to be modeled); on the X-axis, the predictor variables: the factor 'Language' and the covariates in the additive models that will provide the shape and the trajectories of the curves (i.e., the smoothing effects: 'Language + covariates'), and the product of the 'Language' and a metric feature that will provide a more accurate projection of the response variable (i.e., factor-smooth interactions: 'covariate:Language'). Abbreviation: GAMs = Generalized Additive Models.

Results for speech perception

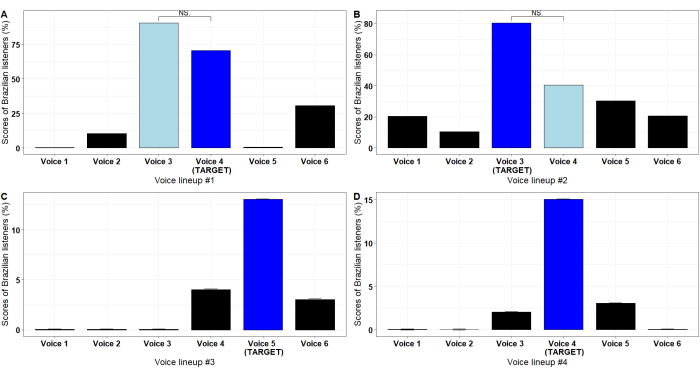

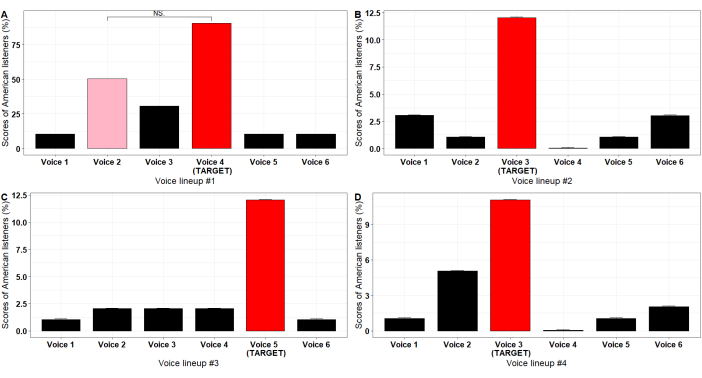

In relation to the BP voice lineups, in lineup #1 (Figure 8A), the foil voice #3 was judged as being the target voice. In fact, the target voice was the voice #4. Results show no statistically significant difference (see Supplemental Table S1) between the foil #3 (83%) and the target voice #4 (71%). In lineup #2 (Figure 8B), a comparison between the target voice #3 (40%) and the foil #4 (20%) also showed no statistically significant difference (see Supplemental Table S1). For the English voice lineups, in lineup #1 (Figure 9A), there was no significant difference (see Supplemental Table S1) between the foil #2 (26%) and the target voice #4 (54%).

In the analysis of voice similarity, both the Cosine similarity (CoSim) and the Euclidean distance (EucDist, [EucDist transformed]54) showed a consistent correlation between foil #3 and the target for the BP lineup #1 (CoSim = 0.99; EucDist = 77.4, [0.93]). Correlation between foil #4 and the target was similarly strong in the BP lineup #2 (CoSim = 0.99, EucDist = 76.3, [0.93]). In the English lineup #1, correlation was consistent for CoSim (0.83), but weak-to-satisfactory for EucDist (213.0, [0.47]). Overall, both CoSim and EucDist were satisfactory techniques in determining voice similarity. In addition, CoSim performed slightly more effectively, as addressed by Gahman and Elangovan52 (see Supplemental Table S1).

In terms of similarity, what could have led to an increase in false alarms? Prosodic-acoustic features showed evidence that, although foils and target voices performed (statistically) significantly differently (with an exception for the lineups presented in Figure 8A,B and Figure 9A), listeners' choices were somewhat spread across different foils in the other lineups (Figure 8C,D, and Figure 9B-D). Such judgment seems to depend more on one (or maybe more) specific acoustic feature that speakers share than on a whole class of prosodic-acoustic features. For instance, our study presented several features (co)varying between productions; nevertheless, perceptual results show the judgments' preferences for a specific foil to match the target voice8,35,64,65. Further analyses are necessary in future work.

The choice of a multidimensional parametric matrix for acoustic similarity66, such as the one presented in this study, is worth noting. Our findings are aligned with previous studies that used acoustic features based on f0, VQ, and intensity9,35. Such features are particularly recommended for speaker comparison tasks in the forensic field9,66. It is nevertheless important to highlight that in the present research, none of the foils were predicted to be similar to the target voice, although we used a variant of McFarlane's guidelines16,17 in the preparation of the voice lineups for foreign-accented speaker recognition. Furthermore, we must emphasize that our voice lineup procedure differs from McFarlane's guidelines in important ways, including stimulus length, number of voices, selection of foils (randomized here), and speech style.

The extent to which the prosodic-acoustic features of f0, voice quality, and intensity are related to listeners' perception likely depends heavily on the speaking style used in the research. Here, we used reading passages. The majority of earwitness studies opt for (semi-)spontaneous stimuli as a balanced solution (e.g., the DyViS corpus67, which has been extensively employed in contemporary earwitness investigations28,68). We chose reading tasks because our corpus, at the time this manuscript was written, consisted exclusively of data obtained from reading tasks. It is, however, important to acknowledge that the compilation of a (semi-)spontaneous corpus is already in progress. Furthermore, the act of reading a story can generate a dependable level of uniformity and variation, both intra-speaker and inter-speaker. This facilitates the emphasis on prosodic or phonetic characteristics (individual or dialectal) in situations involving voice comparison. This becomes especially noticeable in instances of vocal load during the production of a foreign accent, even among proficient speakers8,9,23,54.

Figure 8: Bar plots containing the results for the four lineups carried out by the Brazilian listeners. (A,B) Non-significant difference between the target voice (in blue) and a foil (in light blue). (C,D) Significant differences between the foils and the target voice. Abbreviation: NS = non-significant.

Figure 9: Bar plots containing the results for the four lineups carried out by the American listeners. (A) Non-significant difference between the target voice (in red) and a foil (in pink). (B,C,D) Significant differences between the foils and the target voice. Abbreviation: NS = non-significant.

Supplementary Table S1: Speech production statistics – Generalized Additive Models (GAMs): The F-statistics (degrees of freedom), the adjusted R² of each statistically significant prosodic-acoustic feature (Figure 6), and rhythm metric (Figure 7) per Language level, as well as the individual values of each smooth term (the covariates). Speech perception statistics: The Kruskal-Wallis χ² statistics (degrees of freedom), the p-values, and the adjusted η², as well as a post-hoc Dunn's test for pairwise comparison (Figure 8 and Figure 9) of each statistically non-significant difference between the pairs of target-foil voices (Figure 8A,B and Figure 9A). Acoustic similarity matrices for Cosine and Euclidean distance of each lineup.

Discussion

The current protocol presents a novelty in the field of (forensic) phonetics. It is divided into two phases: one based on production (acoustic analysis) and one based on perception (judgment analysis). The production analysis phase comprises data preparation, forced alignment, realignment, and automatic extraction of prosodic-acoustic features, in addition to the statistical analysis. This protocol connects the stage of data collection to the data analysis in a faster and more efficient way than traditional protocols based on predominantly manual segmentation.

The realignment process, shown in protocol steps 1.3.6 to 1.3.6.9, operates on the phone and word units generated in protocol steps 1.3.1 to 1.3.4. The novelty of the realignment process is that it generates a new TextGrid containing three new text tiers: a syllabic tier, a speech chunk tier that can be generated automatically or manually based on words, and a tone tier that can be quite useful for calculating f0 measures. The realignment guidelines proposed for this protocol were previously used in studies of L2 speech rhythm21,69,70, studies for the detection of foreign accent degree using machine learning techniques22, and studies in the forensic domain9.

The automatic extraction of prosodic features, presented in protocol steps 1.3.7 to 1.3.7.10, generates a multidimensional matrix of prosodic-acoustic features, as demonstrated in the current research. Such features include melodic, durational, and spectral (voice quality and intensity) speech features71. A considerable number of these acoustic features are less sensitive to, and somewhat robust against, the noise in audio recordings that may be collected in forensic or other scenarios72. Furthermore, the multidimensional matrix can be applied to speech recognition systems via several AI techniques (work in progress). It can also be quite useful in teaching prosodic aspects in L2 pronunciation classes21 (work in progress).

The statistical analysis of this part of the protocol relies on GAMs because we dealt with a complex relationship between a specific acoustic feature and the multiple levels of the Language factor. To model a specific acoustic feature, for instance, it was necessary to check how other acoustic features from the matrix (the smooth terms) would behave so that we could describe more accurately what was happening during speech production.

Regarding the perception analysis, the current protocol consisted of preparing and implementing the voice lineups, the statistical analysis, and the acoustic similarity analysis. To prepare the lineups, we used the CreateLineup script for Praat, which automatically generates an audio file for each lineup containing the randomly sequenced foils and target voice, as described in protocol steps 2.2 to 2.2.1.9.
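The random sequencing of the voices in a lineup can be illustrated with a minimal Python sketch. It does not reproduce the CreateLineup Praat script (which also builds the audio file); it only shows the ordering step. The function name, the file names, and the number of foils are hypothetical.

```python
import random


def randomize_lineup(target_file, foil_files, seed=None):
    """Return the voices of one lineup in random order, plus the target's position."""
    rng = random.Random(seed)  # a fixed seed makes the order reproducible
    voices = [target_file] + list(foil_files)
    rng.shuffle(voices)
    target_position = voices.index(target_file) + 1  # 1-based voice number
    return voices, target_position


# Hypothetical file names, for illustration only.
order, target_pos = randomize_lineup(
    "target.wav", ["foil_a.wav", "foil_b.wav", "foil_c.wav"], seed=1
)
print(order, target_pos)
```

Recording the seed alongside each lineup is one simple way to document which position the target voice occupied.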

For the statistical analysis of this protocol, we ran the non-parametric Kruskal-Wallis test because our data did not meet the Analysis of Variance (ANOVA) assumptions. Since we were testing a relationship between the judgment scores given by the listeners and the voices of the lineup (the target voice and the foils), we opted to use the linear model statistics.
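As an illustration of the test named above, the following sketch computes the Kruskal-Wallis H statistic (with the usual tie correction) using only the Python standard library. It is not the analysis code used in this study. The effect size formula, η² = (H − k + 1)/(N − k), is a commonly used estimate and is an assumption about how the adjusted η² might be obtained; the scores are invented. The p-value (from a χ² distribution with k − 1 degrees of freedom) and Dunn's post-hoc test are left to a statistics package.

```python
from collections import Counter


def average_ranks(values):
    """Rank values from 1 to N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """Return (H, degrees of freedom, eta-squared) for a list of score lists."""
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    # Tie correction: divide H by 1 - sum(t^3 - t) / (N^3 - N).
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - ties / (n ** 3 - n)
    if correction > 0:
        h /= correction
    k = len(groups)
    eta2 = (h - k + 1) / (n - k)  # ASSUMPTION: one common eta-squared estimate
    return h, k - 1, eta2


# Invented listener scores per voice, for illustration only.
scores_by_voice = {
    "voice_1": [1, 2, 1, 3, 2],
    "voice_2": [2, 3, 2, 1, 2],
    "voice_3": [4, 5, 4, 5, 3],
    "voice_4": [5, 4, 5, 5, 4],
}
h, df, eta2 = kruskal_wallis(list(scores_by_voice.values()))
print(f"H({df}) = {h:.2f}, eta-squared = {eta2:.2f}")
```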

For the acoustic similarity analysis, we used the AcousticSmilarity_cosine_euclidean script for Python. The program opens the computer's directory tree and asks the user to choose the file containing the prosodic-acoustic features of the foils and the target voice that compose the lineup under analysis. At the click of a button, the script generates three similarity matrices: a Cosine, a Euclidean, and a transformed (zero-to-one) Euclidean, in both '.txt' (tab-delimited) and '.csv' files. Note that every dataset (the '.txt' file containing the prosodic-acoustic features of the foils and the target) must be in the original folder of the lineup, and every lineup folder must have a copy of the AcousticSmilarity_cosine_euclidean script.
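The three matrices described above can be sketched in a few lines of standard-library Python. This is not the AcousticSmilarity_cosine_euclidean script itself: the file dialog and file output are omitted, the function names and feature values are hypothetical, and the zero-to-one rescaling (1 − d / max d) is an assumption, since the script may transform the distances differently.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def euclidean_distance(u, v):
    """Straight-line distance between two feature vectors (0.0 = identical)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def similarity_matrices(features):
    """features: dict mapping a voice label to its list of feature values."""
    labels = list(features)
    cos = [[cosine_similarity(features[a], features[b]) for b in labels] for a in labels]
    euc = [[euclidean_distance(features[a], features[b]) for b in labels] for a in labels]
    # ASSUMPTION: rescale distances to a zero-to-one similarity as 1 - d / max(d).
    max_d = max(max(row) for row in euc)
    euc01 = [[1 - d / max_d if max_d > 0 else 1.0 for d in row] for row in euc]
    return labels, cos, euc, euc01


# Invented values (e.g., f0 median in Hz, intensity in dB, a voice quality index).
lineup = {
    "target": [120.0, 65.0, 0.8],
    "foil_1": [122.0, 64.0, 0.9],
    "foil_2": [210.0, 58.0, 0.3],
}
labels, cos, euc, euc01 = similarity_matrices(lineup)
for name, row in zip(labels, cos):
    print(name, [round(value, 3) for value in row])
```

Because the features are on very different scales, a real analysis would likely standardize each feature first; whether and how the original script does this is not stated in the source.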

In summary, the current protocol advances (forensic) phonetics research. Comprising distinct phases (production and perception analyses, as well as acoustic similarity), it bridges the gap between data collection and multidimensional acoustic-feature analysis. Researchers can benefit from a holistic perspective, exploring both production and perception aspects. Moreover, this protocol supports research reproducibility, allowing others to build upon the guidelines of this work.

Limitations and future directions

The subsequent steps will work properly only if the data preparation follows the guidelines proposed in protocol steps 1.3.1 to 1.3.4. In addition, in the forced alignment procedures, an external pre-trained forced aligner, such as MAUS used in the current work, is susceptible to segmentation errors. This is especially so with foreign-accented data on which the aligner was not trained, and it can also happen with pretrained aligners when the audio quality is poor or the aligner has no model for the language of the audio (which makes the expert's work more difficult and time-consuming). Forensic transcription, especially of longer audio files, encounters difficulties with respect to the accurate and reliable representation of spoken recordings72.

There are also several challenges in transcribing and aligning longer audio files. Aligner performance is impacted by the speaking style and phonetic environment, as well as dialect-specific factors. Although there are aligner-specific differences, in general, their performance is similar and generates segmentations that compare well to manual alignment overall73. In addition, L1 and L2 English production were run with a pre-trained acoustic model of American English due to the unavailability of foreign speech models in MAUS. Furthermore, there are no pretrained acoustic models for BP in MAUS. For the BP data, the pre-existing acoustic models of Italian were used (see NOTE, protocol step 1.3.5.7).

In future work (in progress), we will use the Montreal Forced Aligner (MFA)74 as part of the protocol. A great advantage of MFA is the availability of pre-trained acoustic models and grapheme-to-phoneme models for a wide variety of languages (including BP), as well as the ability to train new acoustic and grapheme-to-phoneme models on any new dataset (e.g., our foreign-accented speech corpus)75.

Disclosures

The authors have nothing to disclose.

Acknowledgements

This study was supported by the National Council for Scientific and Technological Development – CNPq, grant no. 307010/2022-8 for the first author, and grant no. 302194/2019-3 for the second author. The authors would like to express their sincere gratitude to the participants of this research for their generous cooperation and invaluable contributions.

Materials

| Name | Company | Catalog number | Comments |
| --- | --- | --- | --- |
| CreateLineup | Personal collection | # | Praat script for preparing voice lineups |
| Dell I3 (with solid-state drive – SSD) | Dell | # | Laptop computer |
| Praat | Paul Boersma & David Weenink | # | Software for phonetic analysis |
| Python 3 | Python Software Foundation | # | Interpreted, high-level, general-purpose programming language |
| R | The R Project for Statistical Computing | # | Programming language for statistical computing |
| Shure Beta SM7B | Shure | # | Microphone |
| SpeechRhythmExtractor | Personal collection | # | Praat script for automatic extraction of acoustic features |
| SurveyMonkey | SurveyMonkey Inc. | # | Platform of free customizable surveys, along with a suite of back-end programs that include data analysis, sample selection, debiasing, and data representation |
| Tascam DR-100 MKII | Tascam | # | Digital voice recorder |
| The Munich Automatic Segmentation System MAUS | University of Munich | # | Forced aligner of audio (.wav) and linguistic information (.txt) files |
| VVUnitAligner | Personal collection | # | Praat script for automatic realignment and post-processing of phonetic units |

References

1. Moyer, A. Foreign Accent: The Phenomenon of Non-native Speech. (2013).

2. Munro, M., Derwing, T. Foreign accent, comprehensibility and intelligibility, redux. J Second Lang Pronunciation. 6 (3), 283-309 (2020).

3. Levis, J. Intelligibility, Oral Communication, and the Teaching of Pronunciation. (2018).

4. Munro, M. Applying Phonetics: Speech Science in Everyday Life. (2022).

5. Gut, U., Muller, C. Speaker Classification. 75-87 (2007).

6. Rogers, H. Foreign accent in voice discrimination: a case study. Forensic Linguistics. 5 (2), 203-208 (1998).

7. Solan, L., Tiersma, P. Hearing Voices: Speaker Identification in Court. Hastings Law Journal. 54, 373-436 (2003).

8. Alcaraz, J. The long-term average spectrum in forensic phonetics: From collation to discrimination of speakers. Estudios de Fonética Experimental / Journal of Experimental Phonetics. 32, 87-110 (2023).

9. Silva, L., Barbosa, P. A. Voice disguise and foreign accent: Prosodic aspects of English produced by Brazilian Portuguese speakers. Estudios de Fonética Experimental / Journal of Experimental Phonetics. 32, 195-226 (2023).

10. Munro, M., Derwing, T. Modeling perceptions of the accentedness and comprehensibility of L2 speech. Studies in Second Language Acquisition. 23 (4), 451-468 (2001).

11. Keating, P., Esposito, C. Linguistic Voice Quality. Working Papers in Phonetics. 85-91 (2007).

12. Niebuhr, O., Skarnitzl, R., Tylečková, L. The acoustic fingerprint of a charismatic voice - Initial evidence from correlations between long-term spectral features and listener ratings. Proceedings of Speech Prosody. 359-363 (2018).

13. San Segundo, E. International survey on voice quality: Forensic practitioners versus voice therapists. Estudios de Fonética Experimental. 29, 8-34 (2021).

14. Farrús, M. Fusing prosodic and acoustic information for speaker recognition. International Journal of Speech, Language and the Law. 16 (1), 169 (2009).

15. Farrús, M. Voice disguise in automatic speaker recognition. ACM Computing Surveys. 51 (4), 1-2 (2018).

16. Nolan, F. A recent voice parade. The International Journal of Speech, Language and the Law. 10 (2), 277-291 (2003).

17. McDougall, K., Bernardasci, C., Dipino, D., Garassino, D., Negrinelli, S., Pellegrino, E., Schmid, S. Ear-catching versus eye-catching? Some developments and current challenges in earwitness identification evidence. Speaker Individuality in Phonetics and Speech Sciences. 33-56 (2021).

18. Gut, U. Rhythm in L2 speech. Speech and Language Technology. 14 (15), 83-94 (2012).

19. Urbani, M. Pitch Range in L1/L2 English. An Analysis of F0 using LTD and Linguistic Measures. Coop. (2012).

20. Gonzales, A., Ishihara, S., Tsurutani, C. Perception modeling of native and foreign-accented Japanese speech based on prosodic features of pitch accent. J Acoust Soc Am. 133 (5), 3572 (2013).

21. Silva, L., Barbosa, P. A. Speech rhythm of English as L2: an investigation of prosodic variables on the production of Brazilian Portuguese speakers. J Speech Sci. 8 (2), 37-57 (2019).

22. Silva, L., Barbosa, P. A. Foreign accent and L2 speech rhythm of English a pilot study based on metric and prosodic parameters. 1, 41-50 (2023).

23. Costa, A. El cerebro bilingüe: La neurociencia del lenguaje. (2017).

24. Eriksson, A. Tutorial on forensic speech science: Part I. Forensic Phonetics. (2005).

25. Harvey, M., Giroux, M., Price, H. Lineup size influences voice identification accuracy. Applied Cognitive Psychology. 37 (5), 42-89 (2023).

26. Pautz, N., et al. Identifying unfamiliar voices: Examining the system variables of sample duration and parade size. Q J Exp Psychol (Hove). 76 (12), 2804-2822 (2023).

27. McDougall, K., Nolan, F., Hudson, T. Telephone transmission and earwitnesses: Performance on voice parades controlled for voice similarity. Phonetica. 72 (4), 257-272 (2015).

28. Nolan, F., McDougall, K., Hudson, T. Some acoustic correlates of perceived (dis) similarity between same-accent voices. Proceedings of the International Congress of Phonetic Sciences (ICPhS 2011). 1506-1509 (2011).

29. Sheoran, S., Mahna, D. Voice identification and speech recognition: an arena of voice acoustics. Eur Chem Bull. 12 (5), 50-60 (2023).

30. Hudson, T., McDougall, K., Hughes, V., Knight, R.-A., Setter, J. Forensic phonetics. The Cambridge Handbook of Phonetics. 631-656 (2021).

31. Eriksson, A., Llamas, C., Watt, D. The disguised voice: Imitating accents or speech styles and impersonating individuals. Language and identities. 86-96 (2010).

32. Köster, O., Schiller, N. Different influences of the native language of a listener on speaker recognition. Forensic Linguistics. 4 (1), 18-27 (1997).

33. Köster, O., Schiller, N., Künzel, H. The influence of native-language background on speaker recognition. 306-309 (1995).

34. Thompson, C. P. A language effect in voice identification. Applied Cognitive Psychology. 1, 121-131 (1987).

35. San Segundo, E., Univaso, P., Gurlekian, J. Sistema multiparamétrico para la comparación forense de hablantes. Estudios de Fonética Experimental. 28, 13-45 (2019).

36. Council of Europe. Common European Framework of Reference for Languages: Learning, Teaching, Assessment. (2001).

37. How to use Tascam DR-100 MKII: Getting started. Available from: https://www.youtube.com/watch?v=O2E72uV9fWc (2018).

38. Getting the most from your Shure SM58 microphone. Available from: https://www.youtube.com/watch?v=wweNufW7EXA (2020).

39. Multilingual processing of speech via web services. Computer Speech & Language. Available from: https://clarin.phonetik.uni-muenchen.de/BASWebServices/interface/WebMAUSBasic (2017).

40. Escudero, P., Boersma, P., Rauber, A., Bion, R. A cross-dialect acoustic description of vowels: Brazilian and European Portuguese. J Acoust Soc Am. 126 (3), 1379-1393 (2009).

41. Stevens, M., Hajek, J. Post-aspiration in standard Italian: some first cross-regional acoustic evidence. 1557-1560 (2011).

42. Barbosa, P., Madureira, S. Manual de Fonética Acústica Experimental: aplicações a dados do português. (2015).

43. Praat: Doing phonetics by computer (Version 6.1.38) [Computer program]. Available from: https://www.praat.org/ (1992-2021).

44. VVUnitAligner [Computer program]. Available from: https://github.com/leonidasjr/VVunitAlignerCode_webMAUS (2022).

45. SpeechRhythmExtractor [Computer program]. Available from: https://github.com/leonidasjr/VowelCode (2019-2023).

46. A language and environment for statistical computing. R Foundation for Statistical Computing. Available from: https://www.R-project.org/ (2023).

47. Pigoli, D., Hadjipantelis, P. Z., Coleman, J. Z., Aston, J. The statistical analysis of acoustic phonetic data. Journal of the Royal Statistical Society. 67 (5), 1103-1145 (2018).

48. CreateLineup [Computer program]. Available from: https://github.com/pabarbosa/prosody-scripts-CreateLineUp (2021).

49. Van Rossum, G., Drake, F. L. Python 3 Reference Manual. (2009).

50. AcousticSimilarity_cosine_euclidean [Computer program]. Available from: https://github.com/leonidasjr/AcousticSimilarity/blob/main/AcousticSmilarity_cosine_euclidean.py (2024).

51. Gerlach, L., McDougall, K., Kelly, F., Alexander, A. Automatic assessment of voice similarity within and across speaker groups with different accents. 3785-3789 (2023).

52. Gahman, N., Elangovan, V. A comparison of document similarity algorithms. International Journal of Artificial Intelligence and Applications. 14 (2), 41-50 (2023).

53. San Segundo, E., Tsanas, A., Gómez-Vilda, P. Euclidean distances as measures of speaker similarity including identical twin pairs: A forensic investigation using source and Filter voice characteristics. Forensic Science International. 270, 25-38 (2017).

54. Singh, M. K., Singh, N., Singh, A. K. Speaker's voice characteristics and similarity measurement using Euclidean distances. 317-322 (2019).

55. Darling-White, M., Banks, W. Speech rate varies with sentence length in typically developing children. J Speech Lang Hear Res. 64 (6), 2385-2391 (2021).

56. Barbosa, P. A. Incursões em torno do ritmo da Fala. (2006).

57. Golestani, N., Pallier, C. Anatomical correlates of foreign speech sound production. Cereb Cortex. 17 (4), 929-934 (2007).

58. Trouvain, J., Fauth, C., Möbius, B. Breath and non-breath pauses in fluent and disfluent phases of German and French L1 and L2 read speech. Proceedings of Speech Prosody. 31-35 (2016).

59. Waaning, J. The Lombard effect: the effects of noise exposure and being instructed to speak clearly on speech acoustic parameters. [Master's thesis]. (2021).

60. Villegas, J., Perkins, J., Wilson, I. Effects of task and language nativeness on the Lombard effect and on its onset and offset timing. J Acoust Soc Am. 149 (3), 1855 (2021).

61. Marcoux, K., Ernestus, M. Differences between native and non-native Lombard speech in terms of pitch range. Proceedings of the 23rd International Congress on Acoustics (ICA 2019). 5713-5720 (2019).

62. Marcoux, K., Ernestus, M. Pitch in native and non-native Lombard speech. Proceedings of the International Congress of Phonetic Sciences (ICPhS 2019). 2605-2609 (2019).

63. Marcoux, K., Ernestus, M. Acoustic characteristics of non-native Lombard speech in the DELNN corpus. Journal of Phonetics. 102, 1-25 (2024).

64. Gil, J., San Segundo, E., Garayzábal, M., Jiménez, M., Reigosa, M. La cualidad de voz en fonética judicial [Voice quality in judicial phonetics]. Lingüística Forense: la Lingüística en el ámbito legal y policial. 154-199 (2014).

65. Gil, J., San Segundo, E., Penas-báñez, M. A. El disimulo de la cualidad de voz en fonética judicial: Estudio perceptivo de la hiponasalidad [Voice quality disguise in judicial phonetics: A perceptual study of hyponasality]. Panorama de la fonética española actual. 321-366 (2013).

66. Passeti, R., Madureira, S., Barbosa, P. What can voice line-ups tell us about voice similarity. Proceedings of the International Congress of Phonetic Sciences (ICPhS 2023). 3765-3769 (2023).

67. Nolan, F. The DyViS database: Style-controlled recordings of 100 homogeneous speakers for forensic phonetic research. International Journal of Speech Language and the Law. 16 (1), 31-57 (2009).

68. Caroll, D. Psychology of Language. (1994).

69. Ortega-Llebaria, M., Silva, L., Nagao, J. Macro- and micro-rhythm in L2 English: Exploration and Refinement of Measures. Proceedings of the International Congress of Phonetic Sciences (ICPhS 2023). 1582-1586 (2023).

70. Fernández-Trinidad, M. Hacia la aplicabilidad de la cualidad de la voz en fonética judicial. Loquens. 9 (1-2), 1-11 (2022).

71. Brouwer, S. The role of foreign accent and short-term exposure in speech-in-speech recognition. Atten Percep Psychophys. 81, 2053-2062 (2019).

72. Love, R., Wright, D. Specifying challenges in transcribing covert recordings: Implications for forensic transcription. Front Commun. 6, 1-14 (2021).

73. Meer, P. Automatic alignment for New Englishes: Applying state-of-the-art aligners to Trinidadian English. J Acoust Soc Am. 147 (4), 2283-2294 (2020).

74. McAuliffe, M., Socolof, M., Mihuc, S., Wagner, M., Sonderegger, M. Montreal Forced Aligner: trainable text-speech alignment using Kaldi. 498-502 (2017).

75. Montreal Forced Aligner: MFA Tutorial. Available from: https://zenodo.org/records/7591607 (2023).